NII-CU Multispectral Aerial Person Detection Dataset

The National Institute of Informatics - Chiba University (NII-CU) Multispectral Aerial Person Detection Dataset consists of 5,880 pairs of aligned RGB and far-infrared (FIR) images. They were captured from a drone flying at altitudes between 20 and 50 meters, with the cameras tilted 45 degrees downward. We corrected lens distortion and applied a homography warp to align the thermal images with the RGB images. We then labeled the people visible in the images with rectangular bounding boxes. The footage, recorded in January 2020, shows a baseball field and its surroundings in Chiba, Japan.
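A homography alignment maps each pixel through a 3×3 matrix in homogeneous coordinates. The minimal sketch below maps a single point. The function name apply_homography and the matrix values are hypothetical placeholders, because the actual homography used for this dataset is not given here. For whole images, OpenCV's cv2.warpPerspective performs the equivalent per-pixel warp.

```python
from typing import Sequence, Tuple

# A 3x3 matrix given as rows, e.g. [[h00, h01, h02], [h10, h11, h12], [h20, h21, h22]].
Matrix3 = Sequence[Sequence[float]]


def apply_homography(h: Matrix3, x: float, y: float) -> Tuple[float, float]:
    """Map pixel (x, y) through homography h (hypothetical helper)."""
    # Lift (x, y) to homogeneous coordinates (x, y, 1) and multiply by h.
    xp = h[0][0] * x + h[0][1] * y + h[0][2]
    yp = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this homography")
    # Divide by w to return to Cartesian pixel coordinates.
    return xp / w, yp / w


# Placeholder matrix (NOT the dataset's real alignment): scale by 2, shift by (10, 20).
H_EXAMPLE = [
    [2.0, 0.0, 10.0],
    [0.0, 2.0, 20.0],
    [0.0, 0.0, 1.0],
]
```

With this placeholder, apply_homography(H_EXAMPLE, 5, 7) returns (20.0, 34.0). A real thermal-to-RGB homography generally has a non-trivial bottom row, which is why the division by w is needed.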

Highlights

- RGB images captured with a Zenmuse X3 (3840×2160 pixels)

- Thermal images captured with a FLIR Vue Pro 640 using the White Hot palette (640×512 pixels before alignment)

- RGB images are corrected for lens distortion

- Thermal images are corrected for lens distortion and warped to align with the RGB images

- Labels are provided in tab-delimited CSV format (see Label format below)

Comparison to other multispectral datasets

| Dataset | Training: # persons | Training: # images | Testing: # persons | Testing: # images | # total frames | Drone perspective | RGB: 400 - 800 nm | NIR: 0.8 - 3 μm | MIR: 3 - 6 μm | FIR: 6 - 15 μm | Publication |
|---|---|---|---|---|---|---|---|---|---|---|---|
| KAIST Hwang et al. (2015) | 41.5 k | 50.2 k | 44.7 k | 45.1 k | 95.3 k | | ✔ | | | ✔ | 2015 |
| TODAI Takumi et al. (2017) | - | 1.6 k | - | 1.4 k | 7.5 k | | ✔ | ✔ | ✔ | ✔ | 2017 |
| NII-CU | 16.7 k | 5.0 k | 2.0 k | 0.9 k | 5.9 k | ✔ | ✔ | | | ✔ | 2022 |

Citation

If you find this dataset useful, please cite the following paper:

Speth, S., Gonçalves, A., Rigault, B., Suzuki, S., Bouazizi, M., Matsuo, Y. & Prendinger, H. (2022). Deep Learning with RGB and Thermal Images onboard a Drone for Monitoring Operations. Journal of Field Robotics, 1-29. https://doi.org/10.1002/rob.22082

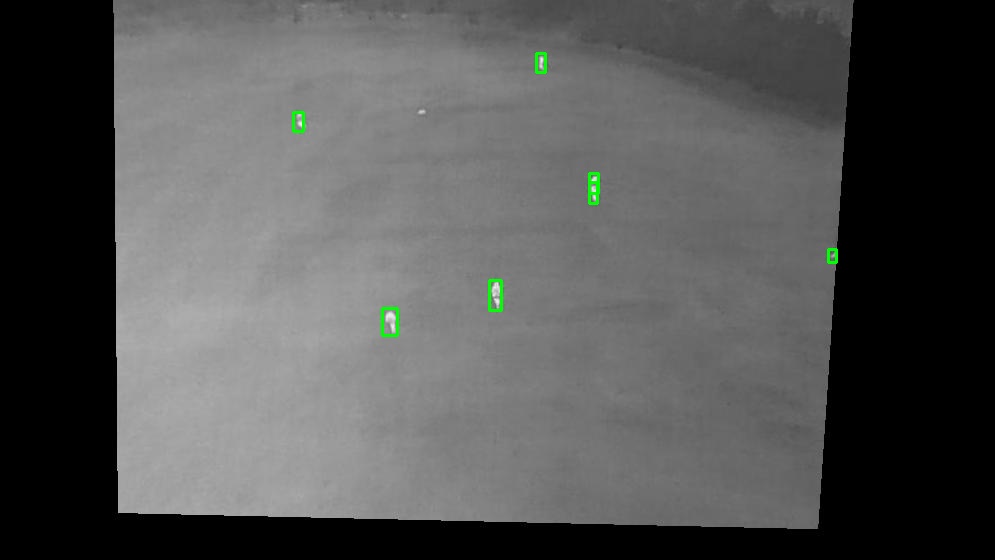

Samples

[Figure: sample images from the dataset]

Download

Labeled image dataset (images+labels): NII_CU_MAPD_dataset.zip (9.1 GB)

Raw video files (unlabeled): NII_CU_MAPD_raw_videos.zip (15.2 GB)

Folder structure

Two variants of the data are provided. The rgb-t folder contains the thermal images uncropped, padded with black margins to match the aspect ratio of the RGB images. The 4-channel folder contains a subset of the data. It keeps only the better-aligned images (type=0) and removes images affected by motion blur (bad=0). These images are cropped to the intersection of the RGB and thermal images, so the thermal images have no black margins.
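The 4-channel variant is meant for models that take RGB and thermal data as a single input. The sketch below builds that input. It assumes the RGB image and its cropped thermal counterpart are already loaded as NumPy arrays of the same height and width. The function name stack_rgbt is hypothetical. Reducing a three-channel White Hot image to one channel is an assumption that its three channels are identical.

```python
import numpy as np


def stack_rgbt(rgb: np.ndarray, thermal: np.ndarray) -> np.ndarray:
    """Stack an (H, W, 3) RGB image and an aligned thermal image into (H, W, 4).

    Hypothetical helper: assumes both images are already cropped and aligned
    to the same H x W, as described for the 4-channel folder.
    """
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError(f"expected RGB of shape (H, W, 3), got {rgb.shape}")
    if thermal.ndim == 3:
        # Assumption: a grayscale thermal image stored with 3 identical channels.
        thermal = thermal[:, :, 0]
    if thermal.shape != rgb.shape[:2]:
        raise ValueError(f"size mismatch: rgb {rgb.shape[:2]} vs thermal {thermal.shape}")
    # np.dstack turns the (H, W) thermal array into (H, W, 1) and appends it
    # after the three RGB channels.
    return np.dstack([rgb, thermal])
```

Keeping the thermal channel last matches the usual "RGB + extra channel" layout. A model's first convolution then only needs its input-channel count changed from 3 to 4.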

Dataset archive

- rgb-t: uncropped images, for separate RGB and thermal models
  - images
    - rgb/train
    - rgb/val
    - thermal/train
    - thermal/val
  - labels
    - train
    - val

- 4-channel: cropped images, for 4-channel models
  - same structure as above
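Training code typically needs to pair each RGB image with its thermal counterpart and its label file. The sketch below does this for the layout above. It assumes, hypothetically, that the three files share a file stem and that label files use a .csv extension. Neither detail is stated here, so check the extracted archive first. iter_samples is an illustrative name, not part of the dataset.

```python
from pathlib import Path
from typing import Iterator, Optional, Tuple, Union


def iter_samples(
    root: Union[str, Path], split: str = "train"
) -> Iterator[Tuple[Path, Path, Optional[Path]]]:
    """Yield (rgb_path, thermal_path, label_path_or_None) for one split.

    root is e.g. the extracted rgb-t (or 4-channel) folder. Assumes matching
    file stems across rgb/, thermal/ and labels/ (hypothetical convention).
    """
    root = Path(root)
    rgb_dir = root / "images" / "rgb" / split
    thermal_dir = root / "images" / "thermal" / split
    label_dir = root / "labels" / split
    for rgb_path in sorted(p for p in rgb_dir.iterdir() if p.is_file()):
        # Thermal counterpart: any file with the same stem, whatever its extension.
        matches = sorted(thermal_dir.glob(rgb_path.stem + ".*"))
        if not matches:
            continue  # skip RGB frames that have no thermal partner
        label_path = label_dir / (rgb_path.stem + ".csv")
        yield rgb_path, matches[0], label_path if label_path.exists() else None
```

Yielding None for a missing label, rather than skipping the pair, keeps images with no annotations available.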

Video archive

- contains the raw, unlabeled, unaligned video files

Label format

Labels are provided as tab-delimited CSV files, with one file per image pair and one label per line, in the following format:

x1 y1 x2 y2 type occluded bad

Example

4.27 111.52 145.07 371.38 2 1 0

136.53 367.65 435.2 841.48 2 0 0
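The sketch below parses one label file. It splits each line on whitespace, so it accepts tab-delimited files as well as the space-separated example above. The Label class and parse_labels function are illustrative names. Skipping a header row is an assumption, included in case a file starts with the field names.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Label:
    x1: float  # left of box, RGB image pixels
    y1: float  # top of box
    x2: float  # right of box
    y2: float  # bottom of box
    type: int  # 0 = both modalities, 1 = thermal only, 2 = RGB only
    occluded: int  # 0 = completely visible, 1 = partially occluded
    bad: int  # 0 = good, 1 = bad (e.g. motion blur)


def parse_labels(text: str) -> List[Label]:
    """Parse the contents of one label file into Label records (illustrative)."""
    labels: List[Label] = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        fields = line.split()  # tabs or spaces; runs of whitespace are merged
        if not fields:
            continue  # ignore blank lines
        if fields[0] == "x1":
            continue  # assumption: tolerate an optional header row
        if len(fields) != 7:
            raise ValueError(f"line {lineno}: expected 7 fields, got {len(fields)}")
        x1, y1, x2, y2 = (float(v) for v in fields[:4])
        box_type, occluded, bad = (int(v) for v in fields[4:])
        labels.append(Label(x1, y1, x2, y2, box_type, occluded, bad))
    return labels
```

To read a file from disk, pass its text, for example parse_labels(path.read_text()) with a pathlib.Path.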

| Field | Description |
|---|---|
| x1 | left of box in pixels, referring to RGB image space |
| y1 | top of box in pixels, referring to RGB image space |
| x2 | right of box in pixels, referring to RGB image space |
| y2 | bottom of box in pixels, referring to RGB image space |
| type | 0 = person visible in both RGB and thermal; 1 = visible only in thermal; 2 = visible only in RGB |
| occluded | 0 = completely visible; 1 = partially occluded |
| bad | 0 = good; 1 = bad, e.g. blurred or smeared by motion blur |
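The type field records which modality a person is visible in. A model trained on a single modality may therefore want only the relevant boxes. The sketch below filters label rows by modality and can drop boxes flagged as bad. It then converts corner coordinates to the normalized center/width/height form used by YOLO-style label files. The function names are hypothetical. The image size is a parameter: pass the size of the image the boxes refer to (the RGB camera records 3840×2160).

```python
from typing import Iterable, List, Tuple

# One label row: x1, y1, x2, y2, type, occluded, bad (see the table above).
Row = Tuple[float, float, float, float, int, int, int]

# Which `type` values count as visible for each modality.
VISIBLE_TYPES = {
    "rgb": {0, 2},  # visible in both, or in RGB only
    "thermal": {0, 1},  # visible in both, or in thermal only
    "both": {0},  # visible in both
}


def select_rows(rows: Iterable[Row], modality: str, drop_bad: bool = True) -> List[Row]:
    """Keep rows visible in `modality`, optionally dropping rows with bad == 1."""
    allowed = VISIBLE_TYPES[modality]
    return [r for r in rows if r[4] in allowed and not (drop_bad and r[6] == 1)]


def to_yolo(row: Row, img_w: float, img_h: float) -> Tuple[float, float, float, float]:
    """Convert pixel corners (x1, y1, x2, y2) to normalized (cx, cy, w, h)."""
    x1, y1, x2, y2 = row[:4]
    return (
        (x1 + x2) / 2 / img_w,  # box center x as a fraction of image width
        (y1 + y2) / 2 / img_h,  # box center y as a fraction of image height
        (x2 - x1) / img_w,  # box width as a fraction of image width
        (y2 - y1) / img_h,  # box height as a fraction of image height
    )
```

Whether to drop bad boxes or keep them as hard examples is a training choice. The drop_bad flag leaves it open.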

Authors

The authors are Simon Speth, Artur Gonçalves, Bastien Rigault, Helmut Prendinger, and Satoshi Suzuki.

The creation of this dataset was supported by Prendinger Lab at the National Institute of Informatics, Tokyo, Japan, and by Chiba University, Japan. We are also grateful to Matsuo Lab at the University of Tokyo for financial support.

License

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License.

For commercial use, please contact us to discuss further options.

Contact: helmut at nii dot ac dot jp